Ever since I got the AT&T 3B2/400 emulator working well enough to share publicly, I’ve received a lot of requests for it to support the (in-)famous 3B2 Ethernet Network Interface option card, commonly referred to by its EDT name, “NI”.

The NI card is based on the Common I/O Hardware (CIO) intelligent peripheral architecture, the same architecture used for the PORTS card and the CTC tape controller card. I’ve implemented support for both PORTS and CTC cards, so how hard could the NI card be, right?

Right.

But at long last, I think I’ve cracked it.

Background

All CIO cards use the same microcontroller, an Intel 80186 running at 8 MHz, and use essentially the same boot ROM to manage getting the card bootstrapped. But after they’re bootstrapped, they actually need to run some sort of code to get their application-specific jobs done. The CTC card needs to know how to talk to its driver and how to talk to the QIC tape drive. The PORTS card needs to know how to talk to its driver and how to talk to the DUART chips that drive the serial lines. Same with the NI card.

Because the ROM on the board only holds the bootstrap code, not the application code, the 3B2 is responsible for uploading an 80186 binary image to the controller card’s RAM and then telling the card to start executing it at a predefined entry point. This is called “pumping”. The program that the card runs is just an 80186 COFF file sitting under the /lib/pump/ directory.

But here’s the critical thing: to the UNIX kernel driver, the details of what the card is doing internally are totally opaque. The kernel driver does nothing but put work requests into a structure in the 3B2’s main memory, the Request Queue, and then interrupt the card to tell it about them. The card does some work, and then puts responses back into a different structure in the 3B2’s main memory, the Completion Queue, and interrupts the host in response.
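To make that handshake concrete, here is a minimal sketch of the request/completion pattern. It is a toy model, not the real driver or firmware: the `Card` class, the opcode value 1, and the tuple layout are all made up for illustration.

```python
from collections import deque


class Card:
    """Toy stand-in for a CIO card; not the real firmware."""

    def __init__(self, request_queue, completion_queue):
        self.request_queue = request_queue
        self.completion_queue = completion_queue
        self.host_interrupts = 0

    def interrupt(self):
        # The host has signalled that new work is waiting.
        while self.request_queue:
            opcode, payload = self.request_queue.popleft()
            # "Do the work", then report the result back to the host.
            self.completion_queue.append((opcode, "done", payload))
            self.host_interrupts += 1  # interrupt the host in response


# Both queues live in the host's main memory in the real system.
request_queue = deque()
completion_queue = deque()
card = Card(request_queue, completion_queue)

# Host side: queue a work request, then interrupt the card.
request_queue.append((1, b"hello"))  # opcode 1 is hypothetical
card.interrupt()
```

The point of the sketch is that the host never sees how the card does its work; it only sees entries appear in the Completion Queue.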

This back-and-forth is pretty simple. The in-memory structure is well documented in the Driver Design Guide, making things pretty straightforward. Even better, for the PORTS and CTC cards, source code for the kernel driver is available, making them pretty easy to understand! Reading the source reveals how the kernel driver responds to interrupts, how it loads and unloads jobs from its job structures, and (probably most importantly) what opcodes it uses when talking with the card.

Getting to the Source

The main thing holding me back from getting NI support added to the emulator has been a lack of documentation and source code. As I mentioned above, the PORTS and CTC cards both have source code for the UNIX driver available, which let me see exactly how they behave and what they expect from the card.

The NI card, though, has no source available. There actually was a package for the 3B2 called nisrc that provided the source code to the driver, but I’ve never seen it in the wild, and I doubt it has been preserved anywhere. I’ve combed my extensive collection of 3B2 software, disk images, and tape images, but it’s not there.

So, what to do? Easy. Write a WE32100 disassembler and disassemble the kernel driver from its binary!

The Disassembler

I actually wrote a very basic WE32100 disassembler in Ruby years ago when I first started the 3B2 emulator project, so I could disassemble its ROM image. But it’s a lousy disassembler. It’s barely usable and assumes it’s disassembling a flat ROM with no sections or other internal structure. I needed something much better for disassembling an actual COFF file, so I wrote a slightly better (but still not great) disassembler, this time in Rust. The new version of the disassembler makes an attempt to handle both symbol names and relocation information, both essential to understanding a binary driver. It works just well enough to get the job done! (That said, there’s lots of room for improvement.)

But What Is it Doing?

Disassembling the kernel driver was incredibly useful, especially because the object file is distributed unstripped, so all symbol names and relocation information are included (it actually has to be this way, because the kernel relies on the symbols to load and configure the driver at kernel build time… but that’s another story).

The symbol names were critical for me, personally, because they gave tips on what each function was for, little clues I could use to help understand what the functions are doing without going totally mad.

The main thing reading the disassembled code got me was an understanding of:

- How big the in-memory queue structures are and where they’re placed in memory.

- What opcodes the driver can send to the card.

- What the opcodes mean.

- How the card handles interrupts.

These are exactly what I needed to know. So, armed with this knowledge, I started implementing.

Failure Is Always An Option

Because I already have several emulated cards that use the same CIO architecture, getting a basic NI card spun up was pretty simple. I just cloned the PORTS card, essentially, and changed all the logic.

You might be wondering, “What about actually, like, handling packets and hooking the emulator up to a real network?” Believe it or not, that was the easy part, and it’s all thanks to the sim_ether library built into the SIMH simulator platform. It makes the job of sending and receiving packets from a real LAN truly trivial. The canonical example is the PDP-11 XQ Ethernet card simulation, which I cribbed extensively from. SIMH has built-in support for PCAP, TUN/TAP, and NAT networking, so absolute kudos to the developers who wrote all of that so I didn’t have to.

The long and short of it is that I had a simulation that could send and receive packets in just one weekend of work. I was overjoyed!

But then, the simulator crashed. And it crashed again. And again. And when I investigated, I noticed two things: The driver was shutting itself off, and two of the three Request Queue structures were getting corrupted. What the heck was going on?

I tackled the crashing and the queue corruption problems separately, which is good, because in the end it turns out they were unrelated.

The Watchdog Timer

First, why all the crashing? Turns out it was related to the driver shutting itself off after 30 seconds. More careful investigation of the disassembled driver revealed that it is actually setting up a watchdog timer that will shut the driver off if it hasn’t received a heartbeat from the NI card at least once every 15 seconds. Not too long after the watchdog times out, the driver assumes the Ethernet is not connected to an AUI, and just puts the interface into a DOWN state. After this, it is no longer prepared to receive packets. Unfortunately, if another packet DOES come in, blam, the driver tries to read from memory it does not have permission to read from, and the kernel crashes with an MMU fault.

Fixing it turned out to be pretty easy: Set up a timer in the simulated card to write a heartbeat response into the Completion Queue every ten seconds. Worked like a charm, no more crashing. But, the Request Queues were still getting corrupted.
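As a rough illustration of why a ten-second heartbeat keeps the driver happy, here is a small sketch of the watchdog logic. The 15-second limit and 10-second period come from the description above; the function name and the one-second tick are assumptions made for the example, not anything taken from the driver.

```python
WATCHDOG_LIMIT = 15    # seconds the driver waits for a heartbeat (from the text)
HEARTBEAT_PERIOD = 10  # seconds between simulated heartbeats (from the text)


def interface_stays_up(heartbeat_period, run_seconds, limit=WATCHDOG_LIMIT):
    """Return True if no gap between heartbeats exceeds the watchdog limit.

    A heartbeat_period of 0 models a card that never sends a heartbeat.
    """
    last_heartbeat = 0
    for now in range(1, run_seconds + 1):
        if heartbeat_period and now % heartbeat_period == 0:
            last_heartbeat = now  # card wrote a heartbeat completion
        if now - last_heartbeat > limit:
            return False  # watchdog fired: driver marks the interface DOWN
    return True
```

With these numbers, `interface_stays_up(HEARTBEAT_PERIOD, 120)` holds, while a card that never sends a heartbeat trips the watchdog.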

Corrupting the Queues, or: The Plot Thickens

This takes a little bit of background information.

The NI card makes use of three Request Queues and one Completion Queue to do its work. The kernel driver creates the queues in main system memory, tells the card where to find them, and lets it go on about its business.

Each of the request queues has eight slots in it, and two pointers, one for load and one for unload. The three Request Queues are used for three different purposes:

- A General Request Queue, used to tell the card to do something like send a packet, report status, turn the interface on, turn the interface off, etc.

- A Small Packet Receive Queue that has pointers to buffers big enough to hold packets of up to 126 bytes. Each slot in the queue has a tag, numbered “1” to “8”.

- A Large Packet Receive Queue that has pointers to buffers big enough to hold packets of up to 1500 bytes (Sorry, no support for jumbo frames!). Just like the small queue, each slot is tagged with a number from “9” to “16”.

The numbered tags are very important. They let the driver and the card agree on which slot (and, therefore, which buffer) each of the completion events corresponds to.

In and Out

When the host wants to send a packet, it puts a job into the General Request Queue with opcode 22, and then interrupts the card. The card handles the send, puts a reply in the Completion Queue, and goes on its merry way.

But receiving a packet is different, because it has to happen asynchronously. When the NI card gets a packet, it will judge the size of the packet it just got, and then choose either the Small Packet or Large Packet receive queue, as appropriate. It pulls a job request out of the selected queue, gets the pointer to the buffer from the job request, and uses DMA to write the packet into the 3B2’s main memory at the specified address. After that, it interrupts the host to say “Look at the Completion Queue, I just did some work!”
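The receive path can be sketched like this. It is only a model of the steps described above: the size limits are the ones given in the queue descriptions, while the function name, the `(tag, buffer_addr)` tuples, and the dictionary standing in for main memory are assumptions for illustration. An empty receive queue is not handled here.

```python
from collections import deque

SMALL_MAX = 126   # bytes, per the Small Packet Receive Queue description
LARGE_MAX = 1500  # bytes, per the Large Packet Receive Queue description


def receive_packet(packet, small_queue, large_queue, memory, completion_queue):
    """Deliver one packet the way the text describes; returns the tag used."""
    if len(packet) > LARGE_MAX:
        raise ValueError("packet too large: no jumbo frame support")
    # Judge the size and pick the matching receive queue.
    queue = small_queue if len(packet) <= SMALL_MAX else large_queue
    tag, buffer_addr = queue.popleft()           # take a job request
    memory[buffer_addr] = bytes(packet)          # stands in for the DMA write
    completion_queue.append((tag, len(packet)))  # then interrupt the host
    return tag
```

The tag travels with the completion entry, which is how the host knows which buffer was just filled.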

Those queues, the Small Packet Receive and Large Packet Receive queues, are set up initially by the kernel driver when it inits the card. It fills the slots with buffer pointers and numbers them correctly from 1 to 8 and from 9 to 16, respectively.

All of this seemed to be working correctly in the simulated card, at first blush. Packets would come in, the card would use the right queue, it would interrupt the host, and the host would process the response. Working great, right?

But actually, no. The queues were getting messed up. When I actually paused the simulator and looked at the queues in memory, I would see the slots tagged incorrectly. For example:

| Queue Slot | Tag | Buffer Pointer |
|---|---|---|
| 1 | 3 | 0x02105B82 |
| 2 | 3 | 0x02105F02 |
| 3 | 4 | 0x02105E82 |
| 4 | 5 | 0x02105E02 |
| 5 | 6 | 0x02105D82 |
| 6 | 7 | 0x02105D02 |
| 7 | 1 | 0x02105C82 |
| 8 | 2 | 0x02105F82 |

Due to a quirk in the driver, this actually still works. Mostly. But it is absolutely wrong. The tags should correspond exactly with the queue slot position, and be numbered from 1 to 8. This weird repeating 3 business and the lack of a tag numbered 8 was disturbing. I had to figure it out.
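A quick way to spot this kind of corruption is to compare the tags found in memory against what a healthy queue should hold. The helper below is a hypothetical sketch (it is not part of the emulator), fed with the tag column from the table above.

```python
def check_tags(tags, first_tag=1):
    """Compare observed slot tags against the expected 1..N sequence.

    Returns (in_order, missing, duplicated).
    """
    expected = list(range(first_tag, first_tag + len(tags)))
    missing = sorted(set(expected) - set(tags))
    duplicated = sorted({t for t in tags if tags.count(t) > 1})
    return tags == expected, missing, duplicated


# Tag column from the corrupted Small Packet Receive Queue shown above.
observed = [3, 3, 4, 5, 6, 7, 1, 2]
in_order, missing, duplicated = check_tags(observed)
```

For the queue above, this reports that tag 8 is missing and tag 3 appears twice.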

At the Mountains of Madness

At this point, I started single stepping through the card initialization routine, the part where the kernel driver sets up the queues.

Indeed, it sets them up in order, starting with the Small Packet Receive queue, tag number 1. It allocates a buffer and then inserts the entry into the first slot, increments the queue’s Load Pointer, and moves onto the next slot.

But when it gets to slot number 8, the last one, it gives up. It actually refuses to build it, and just goes on to the next queue, the Large Packet receive queue. The Large Packet receive queue goes exactly the same way. It builds slots 9 through 15, but gives up on building slot 16, leaving it empty.

I puzzled over this for a while. I read the disassembled code. The logic is 100% sound. When it gets to the last entry in each queue, it asks itself a question: “If I were to increment my Load Pointer, would it be the same as the Unload Pointer?” If it would, there’s no more room in the queue. If it were to increment the pointer, the queue would appear to be empty instead of full. So, it really can’t.

The Load Pointer and Unload Pointer are both initialized to 0. Each entry in the queue is 12 bytes long, so the Load Pointer is incremented by 12 bytes each time a slot is added (the kernel driver never touches the Unload Pointer, only the card can do that). When it gets to the penultimate entry, the load pointer is incremented to 0x54, 84 bytes, 7 entries. If it tried to add one more, it would wrap around to 0x00, and thus the queue would appear empty, so this is strictly forbidden. It stops building entries and moves on, thinking its job is complete.
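The arithmetic is easy to check with a small model of the driver's fill loop. The 12-byte entry size and eight slots come from the text; the function names are made up, and this is a sketch of the logic as described here, not a transcription of the driver.

```python
ENTRY_SIZE = 12                        # bytes per queue entry (from the text)
NUM_SLOTS = 8                          # slots per request queue (from the text)
QUEUE_BYTES = ENTRY_SIZE * NUM_SLOTS   # 96 bytes, so pointers wrap at 0x60


def try_load(load_ptr, unload_ptr):
    """Return the new Load Pointer, or None if the queue would look empty."""
    next_ptr = (load_ptr + ENTRY_SIZE) % QUEUE_BYTES
    if next_ptr == unload_ptr:
        return None  # incrementing would make full look like empty
    return next_ptr


def fill_queue():
    """Fill a fresh queue while the Unload Pointer stays at 0."""
    load_ptr = unload_ptr = 0
    filled = 0
    for _ in range(NUM_SLOTS):
        next_ptr = try_load(load_ptr, unload_ptr)
        if next_ptr is None:
            break
        load_ptr = next_ptr
        filled += 1
    return filled, load_ptr
```

With the Unload Pointer stuck at 0, `fill_queue()` stops after seven entries with the Load Pointer at 0x54, which is exactly what the emulator was doing.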

So, I needed to know: Is this how the real 3B2 does it?

Bringing Gideon Online

I do not have much in the way of real 3B2 hardware. My workhorse is a 3B2/310 named Gideon. Gideon has 2 MB of RAM and a single 72 MB hard disk. Because of its relatively small RAM size, and because the NI driver and the TCP/IP stack are such hogs, I’ve never used an NI card in it. No need to. But, long story short, I took my one and only very precious NI card and installed it into Gideon, added the NI driver and the TCP/IP stack from Wollongong, and wrote a small C program to dump the CIO queues out of kernel memory immediately after the card was initialized.

What I found was that all eight slots in both queues were fully configured, numbered with tags 1–8 and 9–16. It hadn’t skipped slots 8 and 16. The Load Pointers had wrapped back around to 0x00. But, critically, the Unload Pointers had incremented to 0x24. Three jobs had been taken, but no packets had been received yet! They couldn’t have been: It was on an isolated LAN with nobody else connected. This was a super important clue.

At this point, I concluded that there was only one thing I could do. I had to understand the code that the card was running on its 80186 processor. Maybe it was doing something weird at startup to fiddle with the Unload Pointers?

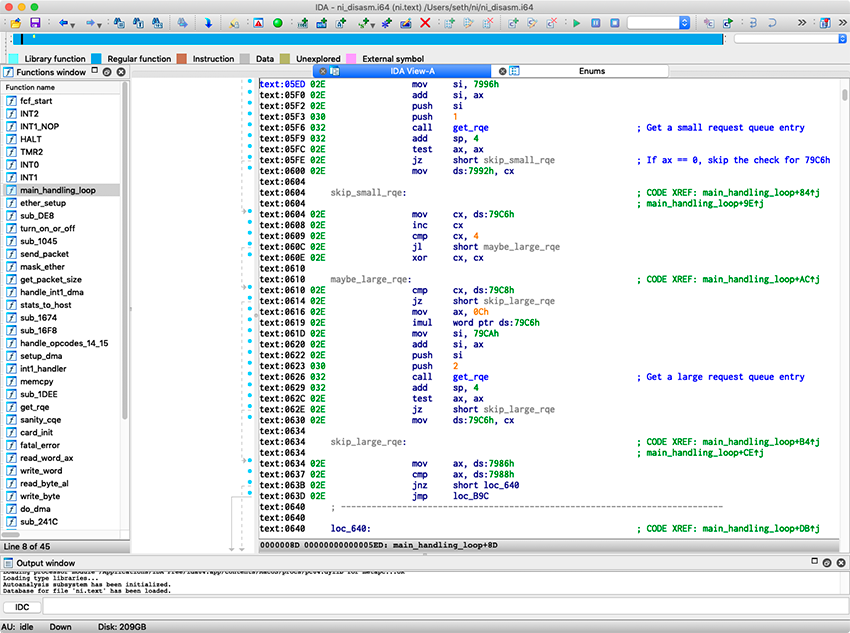

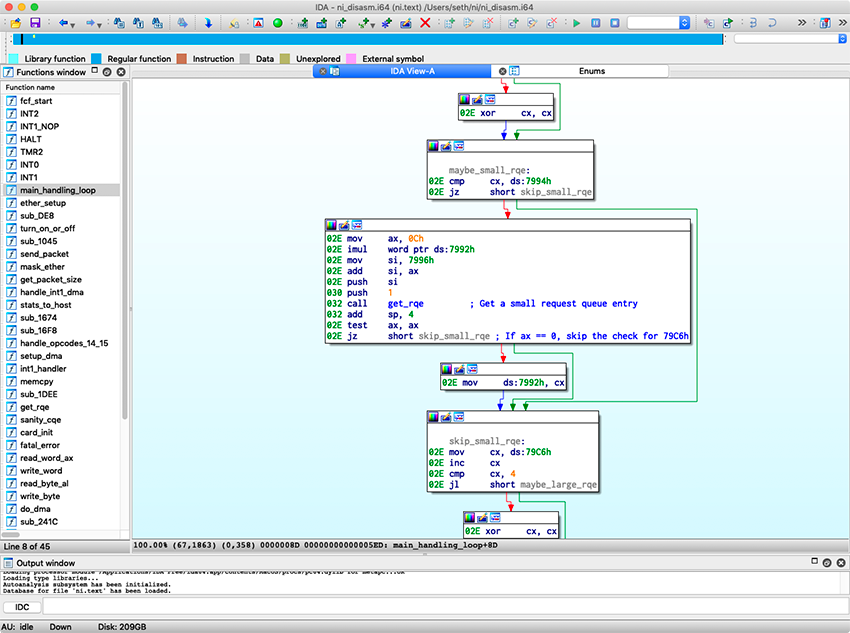

Taking IDA Pro For A Ride

It turns out that there isn’t much available in the way of really good free disassemblers that support the 80186. I was this close to trying to write my own when I decided to take another look at IDA Pro, the interactive disassembler from Hex-Rays.

For those not in the know: IDA Pro is the industry standard in disassemblers. Malware researchers use it all the time. It has a steep learning curve, no “undo” command, and it costs $2,819 (!!!!). But, fortunately, they have a stripped down freeware version that you can use for non-commercial use. It doesn’t support very many processors, but it does support 16- and 32-bit x86, so it was absolutely perfect for my uses. I have no commercial interest in the 3B2 emulator, and never plan to.

IDA Pro is pretty cool, once you get used to it. I used it to take apart the pump code that gets uploaded to the NI card on initialization. It allows you to look at the disassembly in Intel syntax, or at a flow chart of calls and branch points.

Testing My Limits

Now, I’ve never been good at Intel x86 assembly. I just never did much of it, never read much of it, never got into it. I had to change that fast if I had any hope of understanding this.

Frankly, it took a couple of weeks for me to get comfortable enough with what was going on to even begin to understand the pump code. Remember, there are no symbols in the firmware. So it was up to me to break out the 80186 manual and start to understand the processor.

I was also helped by one more very vital resource. The 3B2 Technical Reference Manual, which I finally turned up in 2017, has some details on the NI card’s 80186 memory map, showing what various addresses correspond to. Without that and the 80186 manual, I’d have been hopelessly lost.

And? So? What is it doing?

The fact is, I was pulling my hair out. I was trying to test possibilities one by one, and each time I tried to see if my guess was right, it turned out my guess was wrong. Nothing, and I mean nothing in the code could explain how the real 3B2 filled up all of its queue slots, while the emulator did not.

Finally (FINALLY!), late last night, I figured it out. And, when I did, I was reminded of the Sherlock Holmes quip used in so many of his stories: “When you have eliminated all which is impossible, then whatever remains, however improbable, must be the truth.”

Under The Hood

You see, the NI card is not nearly as interrupt driven as I had believed it to be.

CIO cards are driven by two interrupts that the 3B2 can trigger by reading specific memory addresses: INT0 and INT1. These map directly to the 80186’s INT0 and INT1 lines.

The normal startup procedure for any CIO card after it has been pumped is for the 3B2 to send it an INT0 and then an INT1, in that order. The NI pump code at startup disables all interrupts except for INT0, then in the INT0 handler disables all interrupts except for INT1. When it does then receive the INT1, it performs a SYSGEN, and then immediately starts polling the two Packet Receive Queues, looking for available jobs.

Polling? Yes, polling! The main INT1 handler never returns (well, in truth it has a few terminal conditions that will send it into an infinite loop on a fatal failure, but that’s neither here nor there), it just loops back around if there was nothing for it to do. When it actually does see a job available in either of the two packet receive queues, it will immediately grab it and cache it for later use if and when it receives a packet. It will cache up to three slots like this, and, like always, when it grabs a job off the queue, it increments the Unload Pointer.

[And, as an aside, how the heck do you tell it about work in the General Request Queue, then? This is actually a nifty trick. In the main loop, the card installs a different INT1 handler that does nothing but set a flag and then return. The main loop looks for this flag, and if it’s set, it knows it has a job pending in the General Request Queue. Neat.]
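The flag trick in that aside looks roughly like this. The class and method names are hypothetical, and the real firmware is 80186 assembly, so treat this purely as a model of the control flow.

```python
class NiMainLoop:
    """Toy model of the card's main loop; all names here are hypothetical."""

    def __init__(self):
        self.general_pending = False  # flag set by the interrupt handler
        self.handled = []             # opcodes processed so far

    def int1_handler(self):
        # Does nothing but record that the host raised INT1, then returns.
        self.general_pending = True

    def loop_once(self, general_queue):
        # One pass of the polling loop: only touch the General Request
        # Queue when the flag says there is something in it.
        if self.general_pending:
            self.general_pending = False
            while general_queue:
                self.handled.append(general_queue.pop(0))
```

Keeping the handler this small means the interrupt never does real work; everything happens on the next pass of the loop.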

The NI card is polling the Receive Queues from the very moment that it is initialized. The 3B2 kernel driver doesn’t actually populate those queues with jobs for many milliseconds, so the NI card just loops, watching them.

This, then, was my breakthrough. I realized, hey, during initialization of the queue structures, the 3B2 has to do a lot of work for each slot. It does a lot of system calls. It allocates buffers, frees buffers, talks to the STREAMS subsystem, and so on. It takes a few milliseconds to build a queue entry, it’s not instant.

What must be happening is that the card is polling the queue quite rapidly. It takes much less time for it to loop than it does for the 3B2 to actually fill a slot. So, as soon as it sees a job available, it takes it and increments its unload pointer.

I hope you can see where this is going.

By the time the kernel driver has reached the last slot in the queue, the card has already taken and cached three jobs for later use. It has incremented its Unload Pointer to 0x24. So, on the last slot, when the driver asks itself the question “If I were to increment my Load Pointer, would it be the same as the Unload Pointer?”, the answer is now NO, and it can do so. It fills the slot and the Load Pointer wraps around to 0x00. All 8 slots get populated, and everyone is happy.
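Here is a small simulation of that race, under the simplifying assumption that the card's polling loop always gets a turn between two driver steps. The constants come from the text; the function itself is a sketch, not emulator code.

```python
ENTRY_SIZE = 12                        # bytes per queue entry
NUM_SLOTS = 8                          # slots per receive queue
QUEUE_BYTES = ENTRY_SIZE * NUM_SLOTS   # pointers wrap at 96 (0x60)
MAX_CACHED = 3                         # jobs the card caches ahead of time


def init_queue(card_polls):
    """Model the driver filling one receive queue.

    Returns (slots_filled, load_ptr, unload_ptr).
    """
    load_ptr = unload_ptr = 0
    cached = 0
    filled = 0
    for _ in range(NUM_SLOTS):
        next_ptr = (load_ptr + ENTRY_SIZE) % QUEUE_BYTES
        if next_ptr == unload_ptr:
            break  # queue looks full, so the driver stops building
        load_ptr = next_ptr
        filled += 1
        # The card loops far faster than the driver builds a slot, so it
        # grabs each new job right away until its cache is full.
        while card_polls and cached < MAX_CACHED and unload_ptr != load_ptr:
            unload_ptr = (unload_ptr + ENTRY_SIZE) % QUEUE_BYTES
            cached += 1
    return filled, load_ptr, unload_ptr
```

Without polling, the model stops at seven slots with the Load Pointer at 0x54. With polling, all eight slots fill, the Load Pointer wraps to 0x00, and the Unload Pointer ends at 0x24, matching what Gideon showed.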

Talk about a head smacker. I would never have guessed that the card races the queue initialization like this. It took forever for me to prove it to myself.

How To Fix It

So now my task is clear. Today, I will fix the emulated NI card.

There are two possibilities, and I’m going to explore both:

- Actually poll the queues very rapidly after init, but stop the rapid polling once up to three jobs are cached.

- Cheat! Fill the Unload Pointer with a junk value like 0xff so the queue can get filled, and then set a timeout in the simulator so the card can try to take some jobs after a few milliseconds.

To be honest, while option 1 is more faithful to the real card, option 2 is very attractive because it’s much less complicated.

I’m going to just explore both and see how it pans out.
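For what it’s worth, option 2 can be sketched in a few lines. This is only a guess at how the cheat might behave: the junk value is the 0xff mentioned above, but the idea that the delayed card step takes three jobs starting from the first slot is an assumption, and both function names are made up.

```python
ENTRY_SIZE = 12
NUM_SLOTS = 8
QUEUE_BYTES = ENTRY_SIZE * NUM_SLOTS
JUNK_UNLOAD = 0xFF  # never equals a Load Pointer (those are multiples of 12 below 96)
MAX_CACHED = 3


def driver_fill(unload_ptr):
    """Driver-side fill loop; returns (slots_filled, load_ptr)."""
    load_ptr = 0
    filled = 0
    for _ in range(NUM_SLOTS):
        next_ptr = (load_ptr + ENTRY_SIZE) % QUEUE_BYTES
        if next_ptr == unload_ptr:
            break
        load_ptr = next_ptr
        filled += 1
    return filled, load_ptr


def card_after_timeout(filled):
    """Assumed behavior: after the delay, take up to three jobs from slot 0.

    Returns the Unload Pointer the card would then publish.
    """
    taken = min(MAX_CACHED, filled)
    return (taken * ENTRY_SIZE) % QUEUE_BYTES
```

In this model the junk value lets the driver fill all eight slots, and the delayed step leaves the Unload Pointer at 0x24, the same end state seen on real hardware.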

Conclusion

So, there you have it. At long last, after weeks of work, I understand what’s going on, and I understand how to fix it.

And if you’ve managed to actually read this far, by God, you deserve a cookie.
